If you're working on something real — let's talk.

© 2026 Lampros Tech. All Rights Reserved.

Published On Jul 09, 2025

Updated On Jul 14, 2025

Every swap, vote, or contract call leaves a trace on-chain.

But raw blockchain data is chaotic. Logs are dense, formats vary across networks, and context is often missing. Making sense of it all reliably and at scale takes more than a dashboard tool.

Building a Web3 data pipeline means building for decentralisation. No clean APIs or structured records, just blocks and logs waiting to be decoded.

This guide walks through how to build a production-grade pipeline: from ingestion and indexing to transformation, storage, and query layers.

Whether you’re tracking protocol health, surfacing user behaviour, or enabling real-time alerts, this is the infrastructure that makes it possible.

Let’s get started.

Building your own Web3 data analytics pipeline in 2025 gives you full control over how on-chain data is collected, processed, and used.

With over 200+ active L2 and rollup ecosystems, and a growing number of modular execution environments like zkVMs, optimistic stacks, and app-specific chains, data is no longer standardised or easy to index.

Relying on off-the-shelf dashboards or third-party APIs means:

Owning your pipeline gives you more than just better visibility; it gives you end-to-end control across four critical dimensions of Web3 analytics:

The surface-level utility of dashboards is easy to adopt. But true data leverage comes from building under the surface.

Teams that understand and control their full pipeline from logs to labelling don’t just observe trends, they shape strategy.

In this next section, we’ll unpack the modular systems that make that control possible.

Web3 data is chaotic. Each chains follow different log formats, contracts emit custom events, and even failed transactions hold valuable context that must be captured and interpreted.

To turn this raw activity into structured insights, you need a modular pipeline built for on-chain complexity.

Here’s what that stack looks like:

This is the entry point of your pipeline. It connects directly to blockchain networks to ingest raw data in real time or batches.

Raw logs are not enough. You need to extract meaningful events, decode function calls, and build relationships between contracts and addresses.

This layer takes raw blockchain logs and turns them into structured, reliable tables ready for analysis.

Stores structured data for fast querying and long-term access.

Makes data accessible to internal teams, products, and dashboards.

Keeps the system operational and proactive.

These components form the backbone of any serious Web3 analytics system. You can start lean, but the real value comes when the system scales with your data and grows with your needs.

Let’s explore how to design that system, the architecture, design patterns, and trade-offs that matter.

In emerging modular stacks, indexing is no longer an afterthought; it’s infrastructure.

Rollup-native indexers, off-chain compute, and ZK-proof generation pipelines are reshaping how data is structured and verified.

A well-designed pipeline is more than just a stack of tools. It’s an architecture that balances reliability, cost, performance, and adaptability, especially in Web3, where chains, contracts, and data volumes shift constantly.

It’s about choosing the right design patterns, ones that handle chain fragmentation, real-time ingestion, modular systems, and constant schema evolution without breaking.

Below are the architectural patterns that production-grade teams are using in 2025.

Blockchains are inherently event-driven systems. Every block contains a stream of state-changing transactions, and every transaction emits logs that represent on-chain activity.

EDA aligns perfectly with this model by treating each emitted event as a trigger for downstream processing.

This architecture enables real-time responsiveness, where actions like indexing, alerting, or enrichment happen as events arrive, rather than on a delayed schedule.

Core Pattern | Tooling | Benefits |

|---|---|---|

Ingest raw chain data in near real-time | Kafka for high-throughput streaming | High modularity (independent consumers) |

Parse key events (Transfer, Swap, Deposit) | RabbitMQ / Redis Streams for lighter loads | Horizontal scalability |

Process asynchronously via enrichers, storage workers, and alert systems | Pub/Sub (GCP) for serverless scaling | Built-in failure handling and async retries |

Used by: High-performance protocols with large contract surfaces or cross-chain behaviour, e.g., DEX aggregators, modular DAOs, and restacking protocols.

Blockchain data is generated continuously, but insights often require both immediate reactions and historical context.

Lambda architecture combines real-time streaming with batch processing to handle both.

This design pattern is ideal when protocols need low-latency alerts or dashboards, but also require periodic reprocessing to correct errors, recompute derived metrics, or update schemas as contracts evolve.

Core Pattern | Tooling | Benefits |

|---|---|---|

Speed Layer: Real-time data processing via streams | Apache Spark for distributed batch jobs | Tracks token logic like rebases or rewards |

Batch Layer: Periodic reprocessing for consistency | Apache Flink for real-time streaming | Enables accurate backfills and data corrections |

Serving Layer: Merges both layers for querying | dbt for SQL-based transformations | Suits evolving schemas and complex KPIs |

As protocols grow more complex, relying on a single monolithic indexer becomes a bottleneck.

Microservice architecture offers a scalable alternative by breaking indexing logic into smaller, independent services.

This model lets teams deploy and maintain indexers based on contract groups, chains, or specific event types, reducing overhead and improving fault tolerance.

Core Pattern | Tooling | Benefits |

|---|---|---|

Separate indexers for each contract group or logic type | Containerised deployments with Docker | Easier to manage contract-specific logic |

Services are emitted to a central bus or data store | Orchestration using Kubernetes | Scales dynamically based on protocol activity |

Logic is handled at the edge close to the sources | Message bus with Kafka or NATS | Reduces the blast radius from failures |

Best Practice: Use containerised deployments (Docker, Kubernetes) to scale indexers dynamically based on activity or priority.

In large DAOs and modular protocols, analytics needs vary across sub-teams. A centralised data team becomes a bottleneck.

The data mesh approach solves this by distributing ownership while maintaining consistency.

Each team manages its own data pipelines and domains but follows shared standards for schema, governance, and reporting. This enables autonomy without sacrificing alignment.

Core Pattern | Tooling | Benefits |

|---|---|---|

Each team owns and manages its own data domain | dbt with modular project structure | Enables team autonomy without central bottlenecks |

Shared standards for schema and metrics | DataHub for OpenMetadata | Improves data ownership and accountability |

Central governance ensures visibility | GitOps-driven pipelines | Scales across large DAOs or modular protocols |

Best Fit: DAOs with multiple working groups, protocols with modular architecture, or analytics platforms serving multiple stakeholders.

Why It Matters: With clear ownership and aligned standards, teams can iterate faster on their analytics needs, without breaking global reporting or governance visibility.

Blockchain data is spread across multiple layers. Some lives in calldata, some in state diffs, and some are generated off-chain by relayers or frontends.

Leading data pipelines combine all three sources to deliver complete, scalable, and verifiable analytics.

Hybrid indexing enables high-throughput applications to maintain performance while preserving trust guarantees using zero-knowledge proofs and modular data layers.

Core Pattern | Tooling | Benefits |

|---|---|---|

Mix on-chain logs, off-chain APIs, and zk-compressed snapshots | Archive RPCs for on-chain data | Handles high-throughput use cases (e.g., DePIN, gaming) |

Decode calldata, traces, and state diffs | GraphQL APIs for external sources | Reduces storage with verifiable compression |

Integrate external metadata like relayers or frontends | zkIndexing middleware (e.g., Lagrange, Succinct) | Ensures trustless data pipelines |

Teams increasingly integrate ZK middleware or rollup-native indexers to reduce storage while keeping data verifiability intact.

Good architecture sets the foundation, but execution makes it real. Now that we've mapped the design patterns, let’s break down how to build your pipeline, step by step.

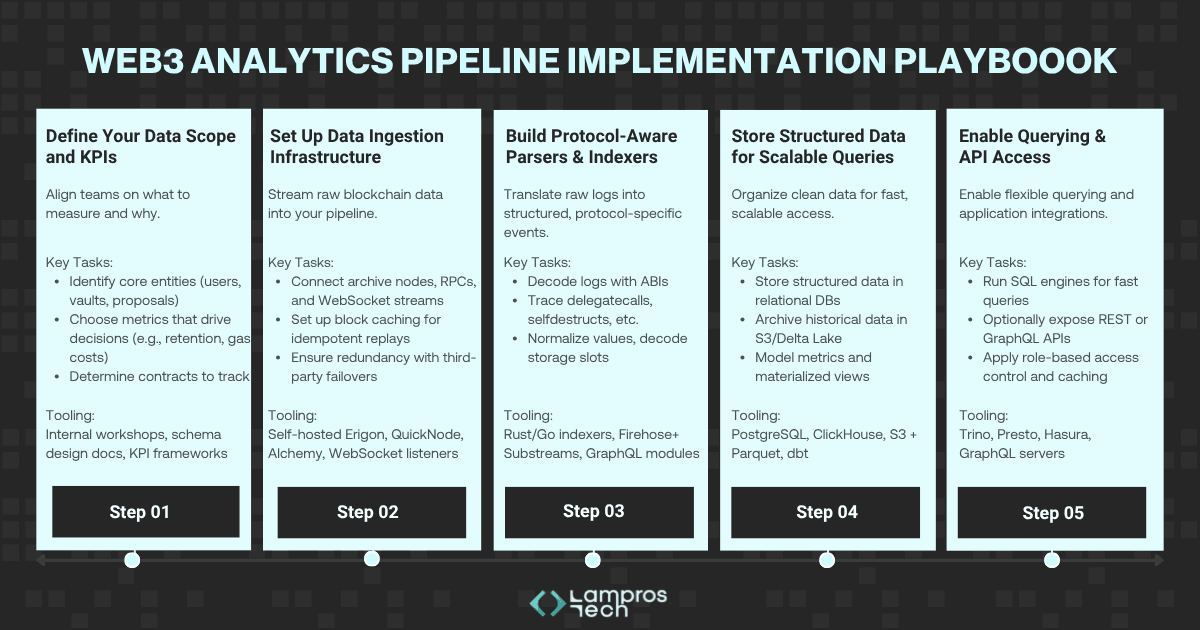

Building your own pipeline can seem complex. But like any robust system, it’s modular. Start small, validate fast, and scale with intent.

Here’s how high-performing teams structure the build process:

A well-built pipeline turns raw data into trusted decisions. But getting there means navigating real-world complexities that are fragmented chains, evolving contracts, scaling bottlenecks, and governance blind spots.

Before teams see clarity, they often wrestle with the mess. Here's what that journey looks like.

Web3 data provides unmatched transparency, but extracting value from it is far from simple.

From fragmented chains to contract quirks and infrastructure limits, building a reliable pipeline requires more than just tooling; it demands design choices that can handle evolving complexity.

From inconsistent log structures to scaling infrastructure, the road to a reliable pipeline is full of edge cases.

Here are the common challenges teams face and how to solve them.

RPC endpoints can drop logs, miss traces, or rate-limit calls. Mempool visibility is inconsistent. Event emissions vary by protocol version.

How to Overcome

Contracts change over time, new events are added, proxies are upgraded, and custom encoding patterns are introduced. This breaks your parsers and analytics if not handled.

How to Overcome

When your protocol hits a spike, new yield strategy, token launch, governance drama, dashboards lag, queries fail, and alerts become noise.

How to Overcome

Bridged assets, governance votes, or user activity often happen across chains and are hard to reconcile in one timeline.

How to Overcome

Once everything is being monitored, teams get buried in alerts, many of them low-value or redundant.

How to Overcome

Even with the right data flowing, teams often don’t act on it, either due to unclear ownership, lack of trust, or missing context.

How to Overcome

Selecting the right analytics tool isn’t just about features; it’s about context. What you need depends on what you’re tracking, how fast you need it, and who’s using the data.

To make that decision easier, we’ve created a comprehensive guide to the Top Web3 Data Analytics Tools to Use, organised by what they’re best suited for. The blog breaks down tools into six practical categories:

Whether you're building a DAO ops dashboard, debugging smart contracts, or tracking L2 performance in real time, this guide helps you map tools to your pipeline’s specific goals.

In the coming years, on-chain analytics will be as critical as protocol security. Teams that treat data as infrastructure, not reporting, will build faster, govern smarter, and ship with confidence.

Building your own Web3 analytics pipeline gives you more than visibility; it gives you leverage. The ability to track what matters, move faster than dashboards allow, and design systems that evolve with your protocol.

From ingestion to indexing, from real-time alerts to DAO insights, owning your pipeline means owning your decisions.

And while the stack can get complex, the principles stay simple: build modular, stay protocol-aware, and make every metric actionable.

If you're thinking about building or rebuilding your analytics system, do it with intent.

The teams that win in Web3 are the ones that see clearly.

FAQs

It’s a modular system that collects and processes on-chain data from blockchain networks. The goal is to turn raw logs into structured insights for querying, monitoring, and decision-making.

Off-the-shelf tools often miss reverted calls, custom events, and cross-chain flows. A custom pipeline gives full control over data quality, logic, and scalability.

Key layers include ingestion, indexing, transformation (ETL), storage, query access, and automation. Each plays a role in turning blockchain noise into signal.

Use internal mapping layers, normalize timestamps, and stitch user sessions across chains to track behaviour and events in a unified timeline.

Teams face schema drift, RPC gaps, usage spikes, and noisy alerts. Solving them requires good architecture, custom logic, and strong operational practices.